A giant in the field of taxonomy is the Swedish scientist Carl Linnaeus (1707–1778). Linnaeus developed the modern system of binomial nomenclature for naming organisms. Each organism gets two names (often in Latin), genus then species. Both are italicized, with the genus capitalized and the species not. For example, the readers of this blog are *Homo sapiens*: genus = *Homo* and species = *sapiens*. My dog Suki is a member of *Canis lupus*. Her case is complicated. The domestic dog is classified as a subspecies of the wolf, *Canis lupus familiaris*, because dogs and wolves can interbreed and are therefore considered the same species. To keep things simple (a physicist’s goal, if not a biologist’s), I will just use *Canis lupus*.

Hodgkin and Huxley performed their experiments on the giant axon of the squid, whose binomial name is *Loligo forbesi* (as reported in Hodgkin and Huxley, J. Physiol., Volume 104, Pages 176–195, 1945). In their later papers they mention only the genus *Loligo*, and I am not sure which species they used; they might have used several. My daughter Katherine studied yeast as an undergraduate biology major at Vanderbilt University, and the yeast species most commonly used by biologists is *Saccharomyces cerevisiae*. The nematode *Caenorhabditis elegans* is widely used as a model organism for studying the nervous system. You will often see its name shortened to *C. elegans* (abbreviating the genus to its initial is common in the Linnaean system). Another popular model system is the egg of the frog *Xenopus laevis*. The mouse, *Mus musculus*, is the most common mammal used in biomedical research. I’m not enough of a biologist to know how viruses, such as the tobacco mosaic virus, fit into binomial nomenclature.

Out of curiosity, I wondered which binomial names Russ Hobbie and I mentioned in the 4th edition of Intermediate Physics for Medicine and Biology. It is surprisingly difficult to say. I can’t just search my electronic version of the book, because what keyword would I search for? I skimmed through the text and found these four; there may be others. (Brownie points to any reader who finds one I missed and reports it in the comments section of this blog.)

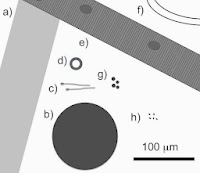

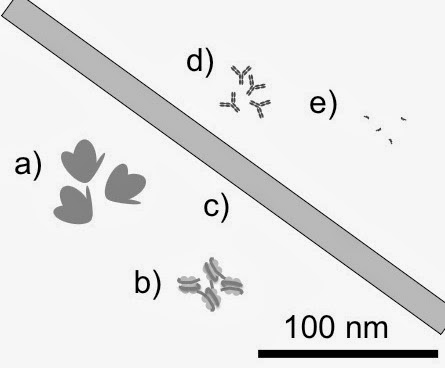

- *Escherichia coli* (*E. coli*): the most famous model system in all of biology. The name of this bacterial species appears on page 2 of IPMB and in Fig. 1.1. It also appears on pages 29 and 71 (in homework problems), on page 85 in Table 4.2, and on pages 96 and 109.

- *Aquaspirillum magnetotacticum*: a species of magnetotactic bacterium, shown in Fig. 8.25 on page 217 of IPMB. Apparently this organism has since been reclassified as *Magnetospirillum magnetotacticum*.

- *Chara corallina*: I had forgotten about this one until I stumbled upon it while browsing through the book. In IPMB, it appears on page 225, in the title of a journal article in the list of references for Chapter 8. It’s a species of green alga that produces a biomagnetic signal.

- *Drosophila melanogaster*, the fruit fly, was mentioned last month in this blog. It appears on page 239 of IPMB, in the discussion of potassium channels.

The Encyclopedia of Life (EOL) began in 2007 with the bold idea to provide “a webpage for every species.” EOL brings together trusted information from resources across the world such as museums, learned societies, expert scientists, and others into one massive database and a single, easy-to-use online portal at EOL.org.

While the idea to create an online species database had existed prior to 2007, Dr. Edward O. Wilson's 2007 TED Prize speech was the catalyst for the EOL you see today. The site went live in February 2008 to international media attention. …

Today, the Encyclopedia of Life is expanding to become a global community of collaborators and contributors serving the general public, enthusiastic amateurs, educators, students and professional scientists from around the world.