
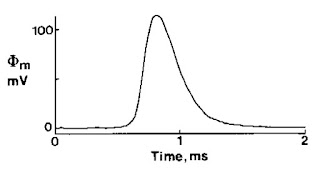

| “The Magnetic Field of a Single Axon.” |

An active nerve axon can be modeled with sufficient accuracy to allow a detailed calculation of the associated magnetic field. Therefore the single axon provides a simple, yet fundamentally important system from which we can test our understanding of the relation between biomagnetic and bioelectric fields. The magnetic field produced by a propagating action potential has been calculated from the transmembrane action potential using the volume conductor model (1). The purpose of this paper is to verify that calculation experimentally. To make an accurate comparison between theory and experiment, we must be careful to correct for all systematic errors present in the data.

As the introduction notes, the volume conductor model was described in reference (1), which is an article by Jim Woosley, Wikswo and myself (“The Magnetic Field of a Single Axon: A Volume Conductor Model,” Mathematical Biosciences, Volume 76, Pages 1–36, 1985). I have discussed the calculation of the magnetic field previously in this blog, so today I’ll restrict myself to the experiment.

To test the volume conductor model it is necessary to measure the transmembrane potential and magnetic field simultaneously. An experiment performed by Wikswo et al. (2) provided preliminary data from a lobster axon, however the electric and magnetic signals were recorded at different positions along the axon and no quantitative comparisons were made between theory and experiment. In the experiment reported here, these limitations were overcome and improved instrumentation was used (3–5).

I was not the first to measure the magnetic field of a single axon. Wikswo’s student, J. C. Palmer, had made preliminary measurements using a lobster axon; reference (2) is to their earlier paper. One of the first tasks Wikswo gave me as a new graduate student was to reproduce and improve Palmer’s experiment, which meant I had to learn how to dissect and isolate a nerve. Lobsters were too expensive for me to practice with, so I first dissected cheaper crayfish nerves; our plan was that once I had gotten good at crayfish we would switch to the larger lobster. I eventually became skilled enough in working with the crayfish nerve, and the data we obtained were good enough, that we never bothered with the lobsters.

I had to learn several techniques before I could perform the experiment. I recorded the transmembrane potential using a glass microelectrode. The electrode is made starting with a glass tube, about 1 mm in diameter. We had a commercial microelectrode puller, but it was an old design and had poor control over timing. So, one of my jobs was to design the timing circuitry (see here for more details). The glass would be warmed by a small wire heating element (much like you have in a toaster, but smaller), and once the glass was soft the machine would pull the two ends of the tube apart. The hot glass stretched and eventually broke, providing two glass tubes with long, tapering tips, each with a hole about 1 micron in diameter at the narrow end. I would then backfill these tubes with 2 molar potassium citrate. The concentration was so high that when I occasionally forgot to clean up after an experiment I would come back the next day and find that the water had evaporated, leaving impressive, large crystals. The back end of the glass tube would be put into a plexiglass holder that connected the conducting fluid to a silver-chloride electrode, and then to an amplifier.

One limitation of these measurements was the capacitance between the microelectrode and the perfusing bath. Because the magnetic measurements required that the nerve be completely immersed in saline, I could not reduce the stray capacitance by lowering the height of the bath. This capacitance severely reduced the rate of rise of the action potential, and to correct for it we used “negative capacitance.” We applied a square voltage pulse to the bath, and measured the microelectrode signal. We then adjusted the frequency compensation knob on the amplifier (basically, a differentiator) until the resulting microelectrode signal was a square pulse. That was the setting we used for measuring the action potential. Whenever I changed the position of the electrode or the depth of the bath, I had to recalibrate the negative capacitance.
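The calibration procedure can be illustrated with a minimal numerical sketch. It assumes the microelectrode resistance and the stray capacitance act as a simple first-order RC low-pass filter, and it idealizes the compensation as adding a scaled time derivative to the measured signal (a real negative-capacitance amplifier uses feedback instead). The resistance and capacitance values, and the function names, are illustrative assumptions, not values from the experiment.

```python
import numpy as np

def lowpass(v_in, dt, tau):
    """First-order RC low-pass filter (exact update for a piecewise-constant input)."""
    alpha = 1.0 - np.exp(-dt / tau)
    v_out = np.zeros_like(v_in)
    for i in range(1, len(v_in)):
        v_out[i] = v_out[i - 1] + alpha * (v_in[i] - v_out[i - 1])
    return v_out

def compensate(v_meas, dt, tau_knob):
    """Idealized compensation: add tau_knob times the time derivative of the signal."""
    return v_meas + tau_knob * np.gradient(v_meas, dt)

def rise_time(v, dt, amplitude=1.0):
    """Time between the first crossings of 10% and 90% of the pulse amplitude."""
    i10 = int(np.argmax(v >= 0.1 * amplitude))
    i90 = int(np.argmax(v >= 0.9 * amplitude))
    return (i90 - i10) * dt

# Assumed, illustrative values: 20 megohm electrode, 5 pF stray capacitance.
R = 20e6
C = 5e-12
tau = R * C                      # time constant of 100 microseconds

dt = 1e-6                        # 1 microsecond time step
t = np.arange(0.0, 2e-3, dt)
pulse = np.where((t >= 0.2e-3) & (t < 1.2e-3), 1.0, 0.0)   # square test pulse

measured = lowpass(pulse, dt, tau)          # what the uncompensated electrode reports
restored = compensate(measured, dt, tau)    # knob set to match the time constant

print("rise time without compensation (s):", rise_time(measured, dt))
print("rise time with compensation (s):   ", rise_time(restored, dt))
```

In this idealized model, setting `tau_knob` below `tau` leaves the edges of the pulse rounded, and setting it above `tau` produces overshoot, which is why the knob is adjusted until the recorded pulse looks square.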

To record the transmembrane potential, I would poke the axon (easy to see under a dissecting microscope) with a microelectrode. Often the tip of the electrode would not enter the axon, so I would tap on the lab bench creating a vibration that was just sufficient to drive the electrode through the membrane. Usually I had the output of the microelectrode amplifier go to a device that output current with a frequency that varied with the microelectrode voltage. I’d put this current through a speaker, so I could listen for when the microelectrode tip was successfully inside the axon because the DC potential would drop by about 70 mV (the axon’s resting potential) and therefore the pitch of the speaker would suddenly drop.
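The audio monitor amounts to a voltage-to-frequency conversion. Here is a minimal sketch of the idea, assuming a linear mapping from voltage to pitch; the base frequency, the slope, and the function names are hypothetical choices for illustration and do not describe the actual device.

```python
import numpy as np

BASE_HZ = 1000.0     # assumed pitch when the electrode reads 0 mV
HZ_PER_MV = 5.0      # assumed slope of the voltage-to-frequency mapping

def pitch_hz(v_mv):
    """Map electrode voltage (mV) to tone frequency (Hz) with a linear rule."""
    return BASE_HZ + HZ_PER_MV * np.asarray(v_mv, dtype=float)

def tone(v_mv, sample_rate):
    """Phase-continuous sine tone whose instantaneous frequency tracks the voltage."""
    phase = 2.0 * np.pi * np.cumsum(pitch_hz(v_mv)) / sample_rate
    return np.sin(phase)

sample_rate = 8000
# Half a second outside the axon (0 mV), then half a second inside (-70 mV).
v = np.concatenate([np.zeros(sample_rate // 2),
                    np.full(sample_rate // 2, -70.0)])
audio = tone(v, sample_rate)

print("pitch outside the axon (Hz):", float(pitch_hz(0.0)))
print("pitch inside the axon (Hz): ", float(pitch_hz(-70.0)))
```

With these assumed numbers, the 70 mV drop lowers the tone by 350 Hz, a change that is easy to hear without looking away from the microscope.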

Next week I will continue this story, describing how we measured the magnetic field.


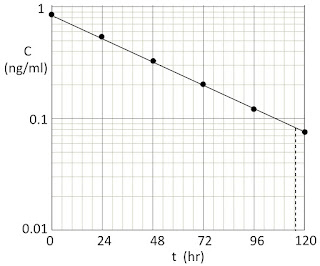

| The measured transmembrane potential. |