In my last blog entry, I began the story behind “The Magnetic Field of a Single Axon: A Comparison of Theory and Experiment” (Biophysical Journal, Volume 48, Pages 93–109, 1985). I wrote this paper as a graduate student working for John Wikswo at Vanderbilt University. (I use the first person “I” in this blog post because I was usually alone in a windowless basement lab when doing the experiment, but of course Wikswo taught me how to do everything, including how to write a scientific paper.) Last week I described how I measured the transmembrane potential of a crayfish axon, and this week I explain how I measured its magnetic field.

|

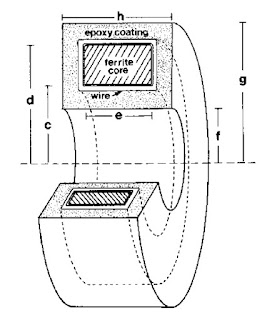

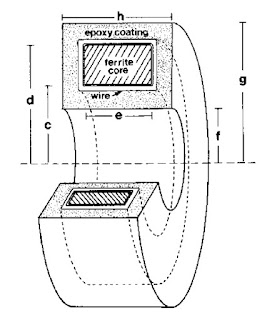

A toroid used to measure

the magnetic field of a single axon. |

The magnetic field was recorded using a wire-wound toroid (I have talked about winding toroids previously in this blog). Wikswo had obtained several ferrite toroidal cores of various sizes, most a few millimeters in diameter. I wound 50 to 100 turns of 40-gauge magnet wire onto the core using a dissecting microscope and a clever device designed by Wikswo to rotate the core around several axes while holding its location fixed. I had to be careful, because a kink in a wire less than 0.1 mm in diameter would break it. Many times, after successfully winding, say, 30 turns, the wire would snap and I would have to start over. After finishing the winding, I would carefully solder the ends of the wire to a coaxial cable and “pot” the whole thing in epoxy. Wikswo, who excels at building widgets of all kinds, had designed Teflon molds to guide the epoxy. I would machine the Teflon to the size we needed using a mill in the student shop. (With all the concerns about liability and lawsuits these days, student shops are now uncommon, but I found mine enjoyable, educational, and essential.) Next I would carefully place the wire-wound core in the mold with a Teflon tube down its center to keep the epoxy from sealing the hole in the middle. The entire mold/core/wire/cable assembly would then be placed under vacuum (to prevent bubbles) and filled with epoxy. Once the epoxy hardened and I removed the mold, I had a “toroid”: an instrument for detecting action currents in a nerve. In 1984, this “neuromagnetic current probe” earned Wikswo an IR-100 award. The basics of this measurement are described in Chapter 8 of Intermediate Physics for Medicine and Biology.
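The number of turns matters in two ways. As a rough, idealized sketch (assuming a core with a rectangular cross-section of inner radius a, outer radius b, height h, and relative permeability μr, which is not a description of Wikswo’s actual cores), a current I threading the hole links the winding through a mutual inductance M = N μr μ0 h ln(b/a)/(2π), so the open-circuit induced voltage is M dI/dt, while the winding’s self-inductance is L = N² μr μ0 h ln(b/a)/(2π). The helper `toroid_inductances` and every dimension below are hypothetical, chosen only for illustration.

```python
import math

MU0 = 4e-7 * math.pi  # permeability of free space, T*m/A (classical SI value)


def toroid_inductances(n_turns, mu_r, inner_radius, outer_radius, height):
    """Idealized toroid with a rectangular cross-section (an assumption).

    Returns (M, L) in henries: M couples the winding to a single straight
    conductor (or axon) threading the hole; L is the winding's self-inductance.
    """
    per_turn = mu_r * MU0 * height * math.log(outer_radius / inner_radius) / (2 * math.pi)
    return n_turns * per_turn, n_turns**2 * per_turn


# Hypothetical core: 1 mm inner radius, 2 mm outer radius, 1 mm thick, mu_r = 2000.
for n in (50, 100):
    M, L = toroid_inductances(n, 2000, 1e-3, 2e-3, 1e-3)
    emf = M * 1e-2  # open-circuit voltage for a current changing at 1 uA per 0.1 ms (1e-2 A/s)
    print(f"N={n}: M={M:.3g} H, L={L:.3g} H, emf={emf:.3g} V")
```

Doubling the turns doubles M (and with it the open-circuit voltage) but quadruples L. Because the winding’s resistance grows only about in proportion to N, the L/R time constant of the toroid grows with N as well.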

In Wikswo’s original experiment to measure the magnetic field of a frog sciatic nerve (the entire nerve, not just a single axon), the toroid signal was recorded using a SQUID magnetometer (see Wikswo, Barach, Freeman, “Magnetic Field of a Nerve Impulse: First Measurements,” Science, Volume 208, Pages 53–55, 1980). By the time I arrived at Vanderbilt, Wikswo and his collaborators had developed a low-noise, low-input-impedance amplifier (basically a current-to-voltage converter) that was sensitive enough to record the magnetic signal (Wikswo, Samson, Giffard, “A Low-Noise Low Input Impedance Amplifier for Magnetic Measurements of Nerve Action Currents,” IEEE Transactions on Biomedical Engineering, Volume 30, Pages 215–221, 1983). Pat Henry, then an instrument specialist in the lab, ran a cottage industry building and improving these amplifiers.

To calibrate the instrument, I threaded the toroid with a single turn of wire connected to a current source that output a square pulse of known amplitude and duration (typically 1 μA and 1 ms). The toroid response was not square, both because we sensed the rate of change of the magnetic field (Faraday’s law) and because of the resistor-inductor time constant of the toroid. Therefore, we had to correct the signal using “frequency compensation”: integrating the signal until the calibration pulse had the correct square shape.
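A minimal sketch shows why integration restores the pulse, under an assumed first-order model rather than the lab’s actual circuit analysis: if the normalized toroid output y obeys τ dy/dt + y = τ dx/dt, where x is the current threading the toroid and τ = L/R, then x = y + (1/τ)∫y dt. The hypothetical functions `toroid_response` and `compensate` below simulate the distorted calibration pulse and then undo the distortion; the sampling interval and the value of τ are made up for illustration.

```python
import numpy as np


def toroid_response(x, dt, tau):
    """Normalized toroid output y for a threading current x (hypothetical helper).

    Assumed model: tau * dy/dt + y = tau * dx/dt. The winding senses dx/dt
    (Faraday's law) and its current relaxes with the L/R time constant tau.
    Discretized exactly for a current that changes in steps at the samples.
    """
    a = np.exp(-dt / tau)  # decay factor over one sample
    y = np.zeros(len(x))
    prev_x, prev_y = 0.0, 0.0
    for n, xn in enumerate(x):
        # A step in x produces an equal jump in y, which then decays exponentially.
        y[n] = a * prev_y + (xn - prev_x)
        prev_x, prev_y = xn, y[n]
    return y


def compensate(y, dt, tau):
    """Frequency compensation: add back a scaled running integral of y.

    Continuous form: x = y + (1/tau) * integral of y dt.
    Discrete form:   x[n] = y[n] + (1 - a) * sum_{k<n} y[k], with a = exp(-dt/tau),
    which exactly inverts toroid_response (1 - a is about dt/tau when dt << tau).
    """
    a = np.exp(-dt / tau)
    earlier_sum = np.concatenate(([0.0], np.cumsum(y)[:-1]))  # sum of samples before n
    return y + (1.0 - a) * earlier_sum


# Calibration: a 1 uA, 1 ms square pulse through a single turn of wire.
dt = 1e-6     # 1 us sampling interval (hypothetical)
tau = 200e-6  # L/R time constant of the toroid (hypothetical value)
t = np.arange(3000) * dt
pulse = np.where((t >= 0.5e-3) & (t < 1.5e-3), 1e-6, 0.0)

raw = toroid_response(pulse, dt, tau)  # jumps at the edges, sags in between
restored = compensate(raw, dt, tau)    # square again
```

In practice τ is not known exactly, which is why the compensation is tuned against a pulse of known shape: too little of the integral term leaves the top of the pulse sagging, and too much makes it tilt upward.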

The amplifier output was recorded by a digital oscilloscope that saved the data to a tape drive. Another of my first jobs at Vanderbilt was to write a computer program that would read the data from the tape and convert it to a format that we could use for signal analysis. We wrote our own signal-processing program, called OSCOPE (somewhat analogous to MATLAB), to analyze and plot the data. I spent many hours writing subroutines (in FORTRAN) for OSCOPE so we could calculate the magnetic field from the transmembrane potential, and vice versa.
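The simplest version of that conversion can be sketched with cable theory. Assuming the action potential propagates at a constant speed u without changing shape, ∂Vm/∂z = -(1/u) ∂Vm/∂t, so the intracellular axial current is Ii = (πa²/(ρi u)) dVm/dt, where a is the axon radius and ρi the intracellular resistivity; integrating Ii recovers Vm. Treating the axon as a long straight wire then gives B = μ0 Ii/(2πr). This is a hypothetical illustration, not OSCOPE’s FORTRAN subroutines: it ignores the return current flowing in the surrounding saline, which a full volume-conductor calculation would include, and the waveform and parameter values are stand-ins, not data.

```python
import numpy as np


def axial_current_from_vm(vm, dt, radius, rho_i, speed):
    """Intracellular axial current I_i(t) from the transmembrane potential Vm(t).

    Assumes a wave of fixed shape moving at constant speed u, so
    dVm/dz = -(1/u) dVm/dt and I_i = (pi a^2 / (rho_i u)) dVm/dt.
    """
    return np.pi * radius**2 / (rho_i * speed) * np.gradient(vm, dt)


def vm_from_axial_current(current, dt, radius, rho_i, speed, v_rest):
    """Inverse: integrate I_i(t) with the trapezoid rule, starting from v_rest."""
    scale = rho_i * speed / (np.pi * radius**2)
    steps = 0.5 * (current[1:] + current[:-1]) * dt
    return v_rest + scale * np.concatenate(([0.0], np.cumsum(steps)))


def field_of_line_current(current, r):
    """Magnetic field (T) a distance r from a long straight current (Ampere's law)."""
    mu0 = 4e-7 * np.pi
    return mu0 * current / (2 * np.pi * r)


# Illustrative (hypothetical) parameters, not values from the paper.
dt = 1e-6  # s
t = np.arange(5000) * dt
vm = -0.080 + 0.100 * np.exp(-((t - 2e-3) / 0.3e-3) ** 2)  # smooth stand-in action potential, V
radius, rho_i, speed = 50e-6, 1.0, 10.0                     # m, ohm*m, m/s

i_axial = axial_current_from_vm(vm, dt, radius, rho_i, speed)
b_field = field_of_line_current(i_axial, r=1e-3)
vm_back = vm_from_axial_current(i_axial, dt, radius, rho_i, speed, v_rest=vm[0])
```

The current comes out biphasic: positive (in the direction of propagation) during the upstroke and negative during repolarization, which is why the magnetic signal looks like the time derivative of the action potential.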

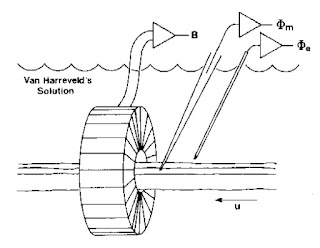

|

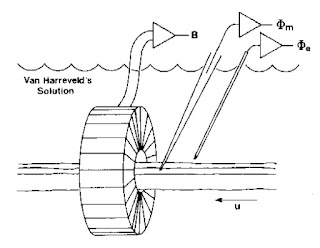

An experiment to measure the

transmembrane potential, the extracellular potential,

and the magnetic field of a single axon. |

Once all the instrumentation was ready, the experiment itself was straightforward. I would dissect the ventral nerve cord from a crayfish and place it in a plexiglass bath (again, machined in the student shop) filled with saline (or more correctly, a version of saline for the crayfish called van Harreveld’s solution). The nerve was gently threaded through the toroid, a microelectrode was poked into the axon, and an electrode to record the extracellular potential was placed nearby. I would then stimulate the end of the nerve. It was easy to excite just a single axon; the nerve cord split to go around the esophagus, so I could place the stimulating electrode there and stimulate either the left or right half. In addition, the threshold of the giant axon was lower than that of the many small axons, so I could adjust the stimulator strength to get just one giant axon.

|

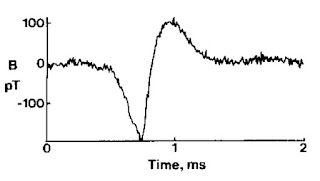

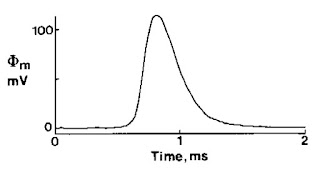

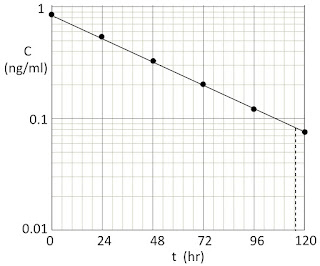

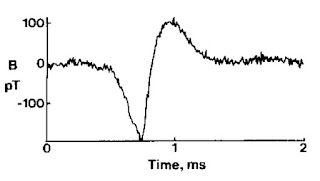

| The magnetic field of a single axon. |

When I first started doing these experiments, I had a horrible time stimulating the nerve. I assumed I was either crushing or stretching it during the dissection, or there was something wrong with the saline solution, or the epoxy was toxic. But after weeks of checking every possible problem, I discovered that the coaxial cable leading to the stimulating electrode was broken! The experiment had been ready to go all along; I just wasn’t stimulating the nerve. Frankly, I now believe it was a blessing to have a stupid little problem early in the experiment that forced me to check every step of the process, eliminating many potential sources of trouble and giving me a deeper understanding of all the details.

As you can tell, a lot of effort went into this experiment. Many things could, and did, go wrong. But the work was successful in the end, and the paper describing it remains one of my favorites. I learned much doing this experiment, but probably the most important thing I learned was perseverance.